We explore the task of predicting the preposition that best expresses the relation between two visual entities.

More specifically, given a trajector entity and a landmark entity and their location and size in an image, predict the most suitable preposition that connects these two entities (as used in human-authored image descriptions).

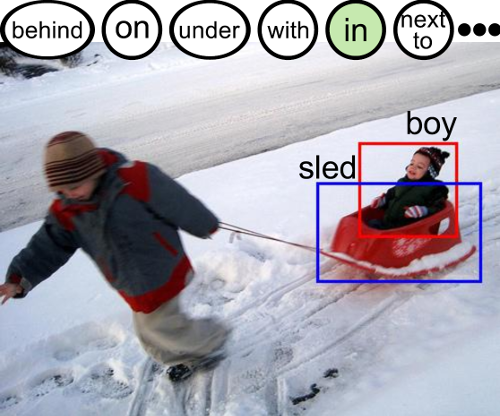

For example, for the instance "boy ___ sled", where "boy" is the trajector and "sled" the landmark, select the best preposition to fill in the blank given the category labels and their corresponding bounding boxes.